Meta Data

Digifi’s ETL engine records meta data statistics for each snapshot so you can easily search for fields within large data sets, track changes across snapshots, and quickly identify errors within your data. The result is a significant improvement in business awareness and analytic accuracy.

How it Works

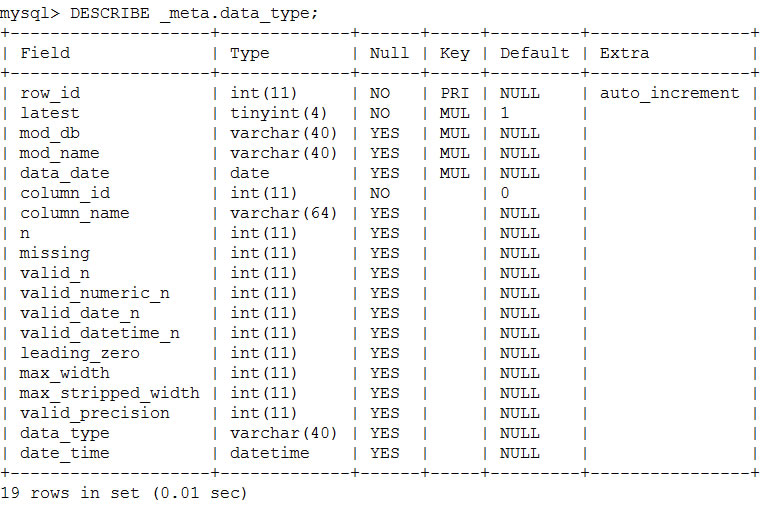

Each load process goes through a set of routines to inspect, classify, rename and clean data to ensure a high level of analytic readiness. One step in this process is to determine the data type of a given data field. This is done by calculating statistics on each field and then applying heuristics to determine the appropriate data type for the given field. Each module, field, data type classification and corresponding statistics are stored in the `_meta` database as table `data_type`. You can view the structure of the table with the command:

Search

In data mining exercises it is common to have 500 – 2,000 different candidate variables. To quickly find variables by name or string pattern, use the following query:

This example also demonstrates how to use meta data statistics to find anomalies in your data or areas where user definitions may need clarification. The field `production_deadline`, for instance, seems like it should be a date field with 30% of the valid values conforming to a standard date format. However, there is a large overall percentage of missing cases and the maximum width far exceeds a date format. Indeed, if this field is to provide business value, it requires additional work in its business definition and process requirements.

Data Type Changes

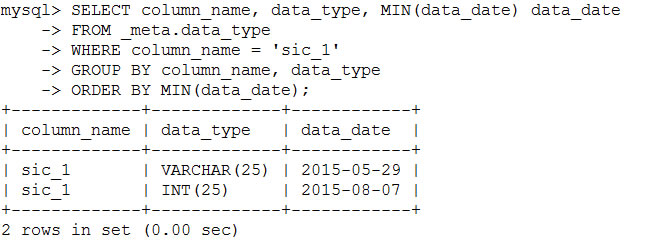

Data meaning and definitions tend to change over time. Without strict data governance, it is difficult to catch when business or process changes alter the capture of information and, potentially, the semantic meaning of the data itself. One way to mitigate this risk and to increase vigilance is by tracking fields that experience changes in their data type classification. A simple query can be used to isolate changes:

FROM (SELECT DISTINCT column_name, data_type FROM _meta.data_type) A

GROUP BY column_name HAVING COUNT(*) > 1;

The result (not shown) is a list of fields that have experienced data type changes over time. This can point you to changes in user input behavior and possibly changes in the business meaning of the data. You can see the change for a given field with the logic:

FROM _meta.data_type

WHERE column_name = ‘sic_1’

GROUP BY column_name, data_type

ORDER BY MIN(data_date)

In this example, SIC_1 changed its data type from VARCHAR to INTEGER. The change in data type signaled a change in process that was not advertised to the analytics team before hand.

Structural Changes

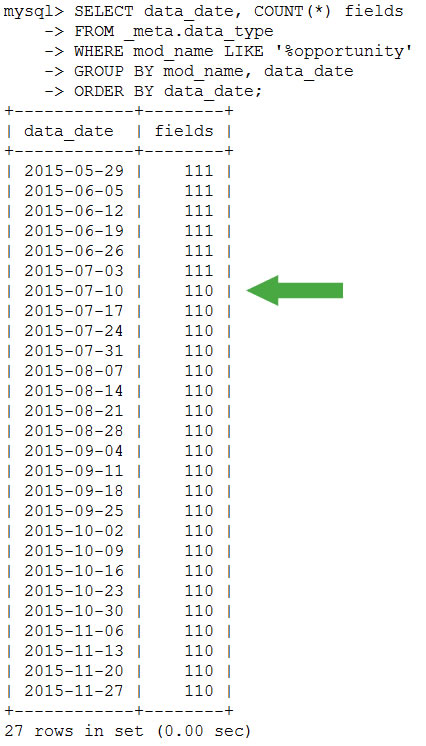

It’s also important to note when fields are added or go missing from your analytic mart. Again, this usually indicates changes in process or business direction. Digifi’s smart ETL will automatically adjust database objects when adding new fields and will ignore missing fields. The status of both actions is reported in the ETL log files. Use the following query to track or visualize changes over time:

FROM _meta.data_type

WHERE mod_name LIKE ‘%opportunity’

GROUP BY mod_name, data_date

ORDER BY data_date

In this example you can see that a field was dropped from the weekly `opportunity` snapshot on July 10, 2015.